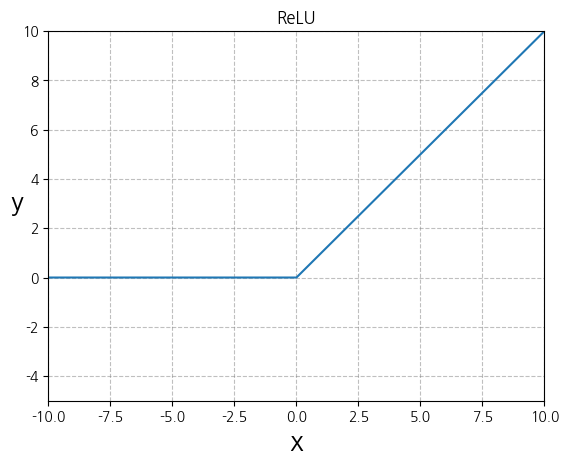

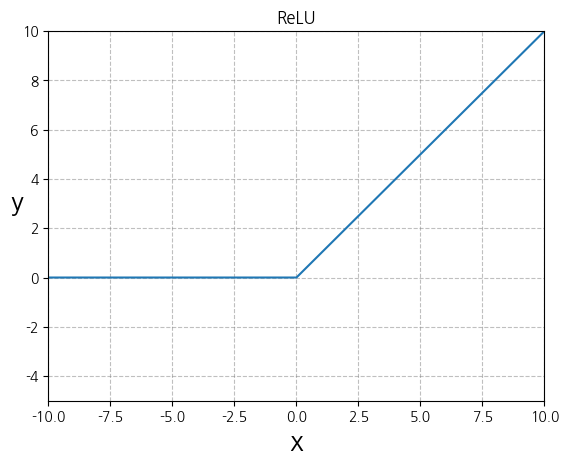

ReLU

Rectified Linear Unit

$$ \mathrm{ReLU}(x) = \max(0, x) $$
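A minimal sketch of ReLU in plain Python, assuming scalar float inputs. Frameworks apply the same rule elementwise to tensors:

```python
def relu(x: float) -> float:
    """ReLU(x) = max(0, x): pass positive inputs through, clamp negatives to 0."""
    return max(0.0, x)


# Usage: negative inputs become 0, positive inputs are unchanged.
print([relu(v) for v in (-2.0, -0.5, 0.0, 1.5)])  # [0.0, 0.0, 0.0, 1.5]
```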

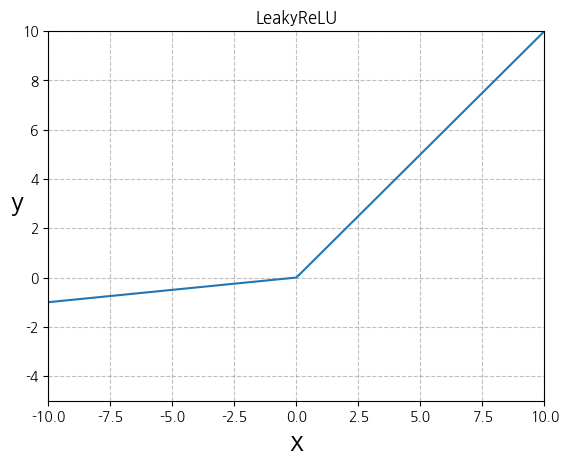

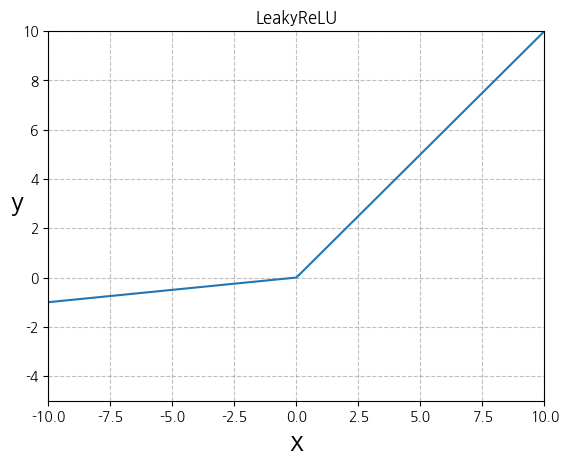

LeakyReLU

$$ \mathrm{LeakyReLU}_{\alpha}(x) = \max(\alpha x, x), \quad 0 < \alpha < 1 $$
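The `max` form only matches the usual piecewise definition ($x$ for $x \ge 0$, $\alpha x$ otherwise) when $0 < \alpha < 1$. A small plain-Python sketch that checks the two forms agree; the default `alpha=0.01` is an assumption chosen for illustration:

```python
def leaky_relu(x: float, alpha: float = 0.01) -> float:
    """LeakyReLU via the max form: max(alpha * x, x), valid for 0 < alpha < 1."""
    return max(alpha * x, x)


def leaky_relu_piecewise(x: float, alpha: float = 0.01) -> float:
    """Equivalent piecewise form: x for x >= 0, alpha * x otherwise."""
    return x if x >= 0 else alpha * x


# Both forms agree when 0 < alpha < 1.
for v in (-3.0, -0.1, 0.0, 2.0):
    assert leaky_relu(v, 0.1) == leaky_relu_piecewise(v, 0.1)

print(leaky_relu(-4.0, 0.25))  # -1.0
```

With `alpha` greater than 1 the `max` form breaks: `leaky_relu(2.0, alpha=2.0)` returns `4.0` rather than `2.0`.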

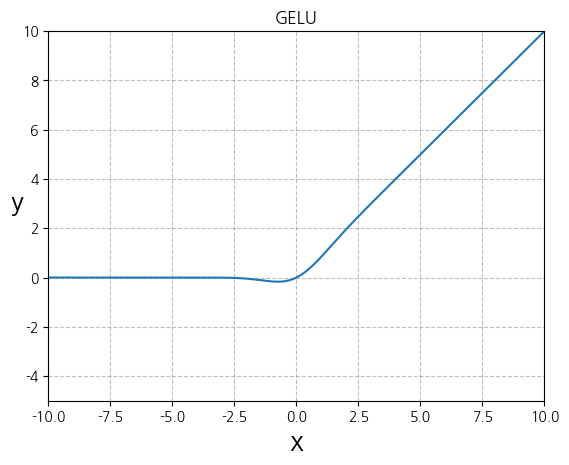

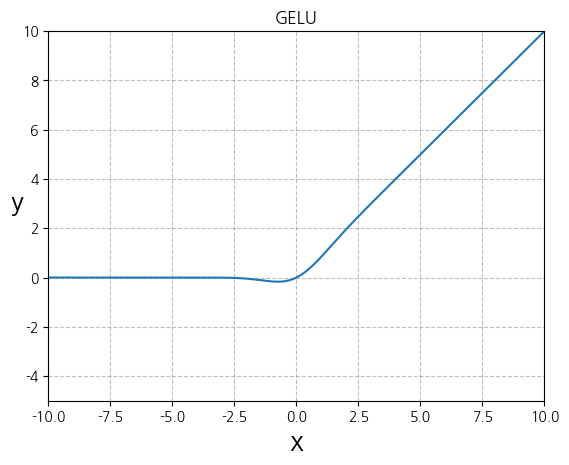

GELU

Gaussian Error Linear Unit

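$$ \mathrm{GELU}(x) = x \cdot \Phi(x) \approx 0.5 \cdot x \cdot (1 + \tanh(\sqrt{2/\pi} \cdot (x + 0.044715 \cdot x^3))) $$

where $\Phi$ is the cumulative distribution function of the standard normal distribution; the tanh expression is the commonly used approximation.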
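A sketch comparing the exact definition $x \cdot \Phi(x)$, written with `math.erf` via $\Phi(x) = \tfrac{1}{2}(1 + \operatorname{erf}(x/\sqrt{2}))$, against the tanh formula above, assuming scalar float inputs:

```python
import math


def gelu_exact(x: float) -> float:
    """Exact GELU: x * Phi(x), with Phi the standard normal CDF written via erf."""
    return 0.5 * x * (1.0 + math.erf(x / math.sqrt(2.0)))


def gelu_tanh(x: float) -> float:
    """Tanh approximation of GELU."""
    inner = math.sqrt(2.0 / math.pi) * (x + 0.044715 * x ** 3)
    return 0.5 * x * (1.0 + math.tanh(inner))


# Print both side by side; the difference stays small over typical input ranges.
for v in (-3.0, -1.0, 0.0, 0.5, 2.0):
    print(f"{v:5.1f}  exact={gelu_exact(v):.6f}  tanh={gelu_tanh(v):.6f}")
```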

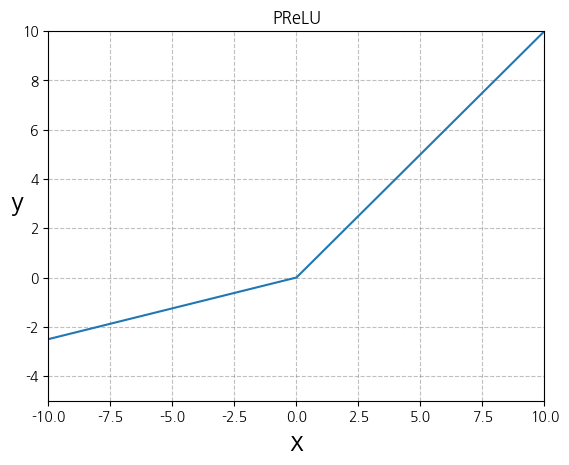

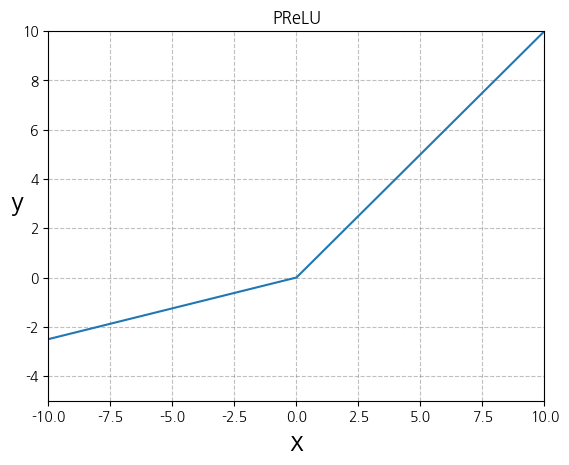

PReLU

Parametric ReLU

$$ \mathrm{PReLU}(x) = \max(0, x) + a \cdot \min(0, x) $$

where $a$ is a learnable parameter.
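PReLU has the same shape as LeakyReLU, except that the negative slope $a$ is learned during training. A hand-written sketch of the forward pass and of $\partial\,\mathrm{PReLU}/\partial a = \min(0, x)$, with one illustrative gradient step. The learning rate and upstream gradient are made-up values; in practice a framework's autograd computes this:

```python
def prelu(x: float, a: float) -> float:
    """PReLU(x) = max(0, x) + a * min(0, x); `a` is a learnable slope."""
    return max(0.0, x) + a * min(0.0, x)


def prelu_grad_a(x: float) -> float:
    """dPReLU/da = min(0, x): only negative inputs contribute to the update of a."""
    return min(0.0, x)


# One illustrative gradient-descent step on `a`.
a, lr = 0.25, 0.1
x = -2.0
upstream = 1.0  # assumed dLoss/dPReLU, chosen only for this example
a -= lr * upstream * prelu_grad_a(x)
print(prelu(-2.0, 0.25), a)
```

Positive inputs leave `a` unchanged, since their gradient with respect to `a` is 0.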

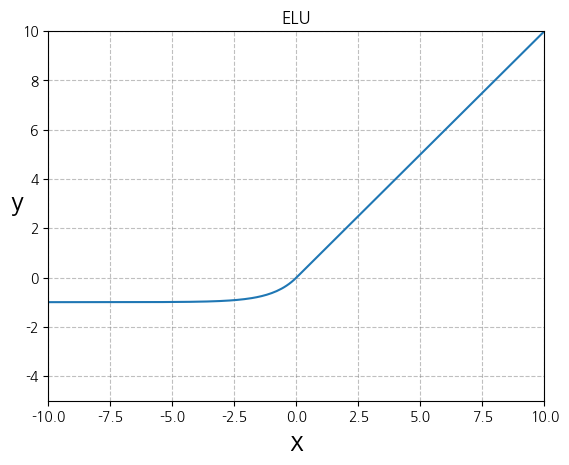

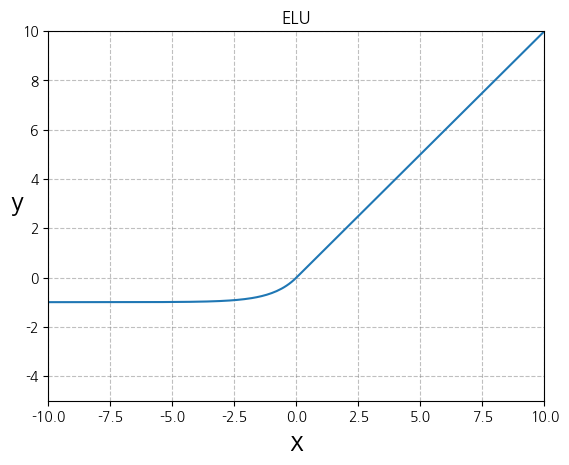

ELU

Exponential Linear Unit

$$ \mathrm{ELU}_{\alpha}(x) = \begin{cases} \alpha (\exp(x) - 1) & \text{if } x < 0 \\ x & \text{if } x \geq 0 \end{cases} $$
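A plain-Python sketch of ELU, assuming scalar inputs and a default `alpha=1.0` chosen for illustration. It uses `math.expm1`, which computes $\exp(x) - 1$ more accurately than `math.exp(x) - 1.0` for $x$ near 0:

```python
import math


def elu(x: float, alpha: float = 1.0) -> float:
    """ELU: x for x >= 0, alpha * (exp(x) - 1) for x < 0."""
    return x if x >= 0 else alpha * math.expm1(x)


print([round(elu(v), 4) for v in (-10.0, -1.0, 0.0, 2.0)])  # [-1.0, -0.6321, 0.0, 2.0]
```

For large negative inputs the output saturates at $-\alpha$, whereas ReLU outputs exactly 0.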

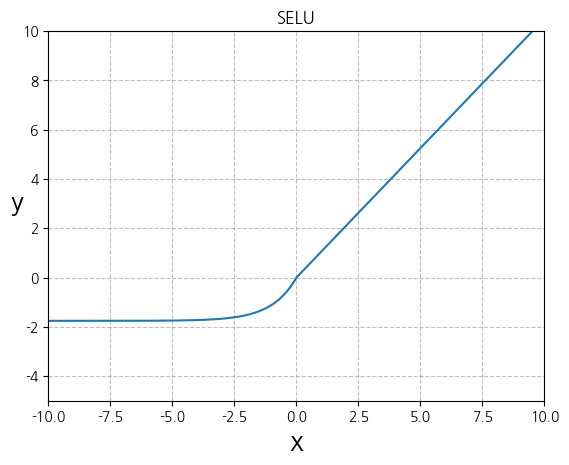

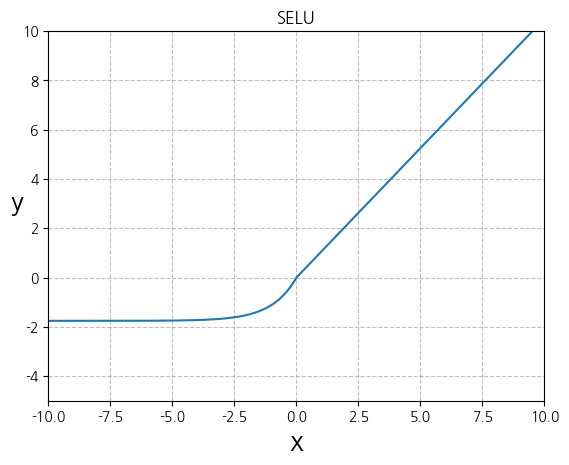

SELU

Scaled Exponential Linear Unit

$$ \mathrm{SELU}(x) = \text{scale} \cdot (\max(0, x) + \min(0, \alpha \cdot (\exp(x) - 1))) $$

with fixed constants $\alpha \approx 1.6733$ and $\text{scale} \approx 1.0507$.
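A sketch of SELU using the constants published with it (the same values PyTorch uses for `nn.SELU`). SELU is ELU with $\alpha \approx 1.6733$, multiplied by $\text{scale} \approx 1.0507$:

```python
import math

# Fixed SELU constants (not learned).
ALPHA = 1.6732632423543772848170429916717
SCALE = 1.0507009873554804934193349852946


def selu(x: float) -> float:
    """SELU(x) = scale * (max(0, x) + min(0, alpha * (exp(x) - 1)))."""
    return SCALE * (max(0.0, x) + min(0.0, ALPHA * math.expm1(x)))


# Positive inputs are scaled by SCALE; negative inputs approach -SCALE * ALPHA.
print(selu(1.0), selu(-1.0), selu(-100.0))
```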

